Cartesian control¶

NAOqi Motion - Overview | API | Tutorial

What it does¶

These APIs are dedicated to control directly the Effectors of the robot in a Cartesian space using an inverse kinematics solver.

Each Effector can be controlled individually, or in parallel with other.

- There are two kinds of inverse kinematics (IK) solver in ALMotion module:

- a classical IK solver which uses only the joints of the effector chain to reach a target.

- a generalized IK solver (also called Whole Body control) which uses all the robot joints to reach a target.

- There are two ways of controlling an effector:

- animation methods (time fixed, blocking function):

- reactive methods (could be changed every ALMotion cycle, non blocking function):

There are also some whole Body user friendly function (balance control, safe Cartesian command and so on).

The details of these functions are in Whole Body control section.

How it works¶

The geometric model of the robot gives the effector positions (![X = [P_x, P_y, P_z, P_{wx},

P_{wy}, P_{wz}]](../../_images/math/d77059c5724efe077bf26e4a48e192d801b25198.png) ) relative to an absolute space in function of all the joint positions

(

) relative to an absolute space in function of all the joint positions

(![q = [q_1,...,q_n]](../../_images/math/a230cc847d8eff95637915fe9507aa51c575c1a3.png) ).

).

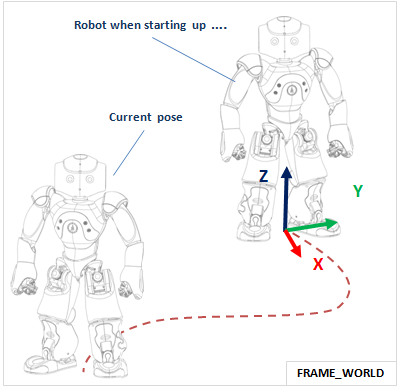

The direct cinematic model is the derivative of the above equation with respect to time.

where  is also called the Jacobian matrix.

is also called the Jacobian matrix.

In our case, we want to control an effector and deduce the joint position. So, we look at the inverse kinematic model:

In many cases,  is not invertible directly (matrix not square), in our case we use the

Moore-Penrose pseudoinverse to solve mathematically this problem.

is not invertible directly (matrix not square), in our case we use the

Moore-Penrose pseudoinverse to solve mathematically this problem.

Note

The classical IK solver due to robot singularity configuration could create huge joint velocity and the robot could lose balance and fall.

For this reason, all Cartesian motions should be tested in a simulator before being tried on the robot.

Limitations and performances¶

Pepper¶

Pepper¶

Most of the methods are unusable for Pepper.

Still available methods:

Do not use:

- ALMotionProxy::positionInterpolation()

- ALMotionProxy::positionInterpolations()

- ALMotionProxy::setPosition()

- ALMotionProxy::setPositions()

- ALMotionProxy::changePosition()

- ALMotionProxy::transformInterpolation()

- ALMotionProxy::transformInterpolations()

- ALMotionProxy::setTransform()

- ALMotionProxy::setTransforms()

- ALMotionProxy::changeTransform()

Getting started¶

Essential information to deal with Cartesian control:

Position and orientation¶

Position and orientation of a part of the robot are controlled using two different systems:

- Position6D and

- Transform.

Definitions and calculation rules are available in the ALMath library: libalmath Overview.

Effectors¶

An Effector is a predefined 3D point in the robot and it’s generally the end of a chain.

We choose these points because with a biped robot, we want for example:

- control the hand in order to catch an object or

- control the foot to kick in a ball.

For further details, see: NAO’s Effectors.

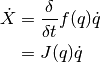

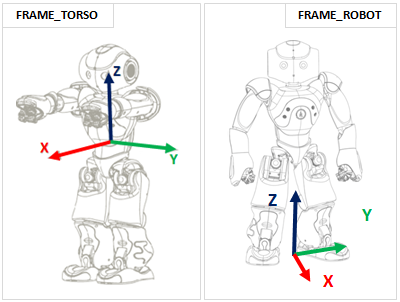

Frames¶

When creating a command for a robot, much attention needs to be placed on the space used to define the command, as a mistake in space could lead to disastrous results.

- FRAME_TORSO: this is attached to the robot’s torso reference, so moves with the robot as he walks and changes orientation as he leans. This space is useful when you have very local tasks, that make sense in the orientation of the torso frame.

- FRAME_ROBOT: this is average of the two feet positions projected around a vertical z axis. This space is useful, because the x axis is always forwards, so provides a natural ego-centric reference.

- FRAME_WORLD: this is a fixed origin that is never altered. It is left behind when the robot walks, and will be different in z rotation after the robot has turned. This space is useful for calculations which require an external, absolute frame of reference.

When executing a task, the space is determined when the task begins, and remains constant throughout the rest of the interpolation. i.e. the interpolation, once defined, will not change as the reference changes due to the legs moving or the torso orientation changing.

Axis Masks¶

When controlling the robot’s arms, he does not have enough degrees of freedom to be able to realize a task that has six constraints.

Using an Axis Mask, you can define which axis you wish to control. The axis mask is passed as a single parameter (an int) which can be calculated using the definitions below:

#define AXIS_MASK_X 1

#define AXIS_MASK_Y 2

#define AXIS_MASK_Z 4

#define AXIS_MASK_WX 8

#define AXIS_MASK_WY 16

#define AXIS_MASK_WZ 32

import almath

#An Axis Mask for Position only: 7

axisMask = almath.AXIS_MASK_X + almath.AXIS_MASK_Y + almath.AXIS_MASK_Z

The axis mask is executed in the same space used to define your task. It has the effect of liberating the constraint on each axis which is not part of the mask. Note carefully that a mask in space FRAME_WORLD is likely to be very different to one in space FRAME_TORSO.

Note

For the Torso, LLeg and RLeg effectors, the axes which are not part of the mask will have a fixed position. i.e. they will not move while performing the task.

For the Head, LArm and RArm effectors, the axes which are not part of the mask are not constrained. i.e. free to move in order to perform the task.

SE3 Interpolation¶

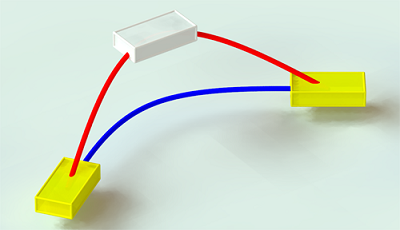

SE3 Interpolation is used for all interpolations that are defined in Cartesian Space. It provides a spline-like interpolation which allows for initial speeds and points of passage to be taken into account, ensuring smooth trajectories that respect speed constraints.

Warning

If the desired motion is unfeasible, the robot can lose balance and fall. All Cartesian motions should be tested in a simulator before being tried on the robot.

Use Cases¶

Case 1: Arm trajectory¶

This example show a simple path composed of two control points, the target and the current position. It uses relative coordinates, so the current position is all zeros.

# -*- encoding: UTF-8 -*-

'''Cartesian control: Arm trajectory example'''

''' This example is only compatible with NAO '''

import argparse

import motion

import almath

from naoqi import ALProxy

def main(robotIP, PORT=9559):

''' Example showing a path of two positions

Warning: Needs a PoseInit before executing

'''

motionProxy = ALProxy("ALMotion", robotIP, PORT)

postureProxy = ALProxy("ALRobotPosture", robotIP, PORT)

# Wake up robot

motionProxy.wakeUp()

# Send robot to Stand Init

postureProxy.goToPosture("StandInit", 0.5)

effector = "LArm"

frame = motion.FRAME_TORSO

axisMask = almath.AXIS_MASK_VEL # just control position

useSensorValues = False

path = []

currentTf = motionProxy.getTransform(effector, frame, useSensorValues)

targetTf = almath.Transform(currentTf)

targetTf.r1_c4 += 0.03 # x

targetTf.r2_c4 += 0.03 # y

path.append(list(targetTf.toVector()))

path.append(currentTf)

# Go to the target and back again

times = [2.0, 4.0] # seconds

motionProxy.transformInterpolations(effector, frame, path, axisMask, times)

# Go to rest position

motionProxy.rest()

if __name__ == "__main__":

parser = argparse.ArgumentParser()

parser.add_argument("--ip", type=str, default="127.0.0.1",

help="Robot ip address")

parser.add_argument("--port", type=int, default=9559,

help="Robot port number")

args = parser.parse_args()

main(args.ip, args.port)

Case 2: Torso and Foot trajectories¶

This example shows how to do simultaneous trajectories of the Torso and the legs. The non-controlled right leg, will behave as if it received a zero relative command.

- Lower the Torso and move to a point above the Right Leg

- Move and turn the Left Foot outwards, with an upright intermediate position.

# -*- encoding: UTF-8 -*-

'''Cartesian control: Torso and Foot trajectories'''

''' This example is only compatible with NAO '''

import argparse

import motion

import almath

from naoqi import ALProxy

def main(robotIP, PORT=9559):

''' Example of a cartesian foot trajectory

Warning: Needs a PoseInit before executing

'''

motionProxy = ALProxy("ALMotion", robotIP, PORT)

postureProxy = ALProxy("ALRobotPosture", robotIP, PORT)

# Wake up robot

motionProxy.wakeUp()

# Send robot to Stand Init

postureProxy.goToPosture("StandInit", 0.5)

frame = motion.FRAME_WORLD

axisMask = almath.AXIS_MASK_ALL # full control

useSensorValues = False

# Lower the Torso and move to the side

effector = "Torso"

initTf = almath.Transform(

motionProxy.getTransform(effector, frame, useSensorValues))

deltaTf = almath.Transform(0.0, -0.06, -0.03) # x, y, z

targetTf = initTf*deltaTf

path = list(targetTf.toVector())

times = 2.0 # seconds

motionProxy.transformInterpolations(effector, frame, path, axisMask, times)

# LLeg motion

effector = "LLeg"

initTf = almath.Transform()

try:

initTf = almath.Transform(motionProxy.getTransform(effector, frame, useSensorValues))

except Exception, errorMsg:

print str(errorMsg)

print "This example is not allowed on this robot."

exit()

# rotation Z

deltaTf = almath.Transform(0.0, 0.04, 0.0)*almath.Transform().fromRotZ(45.0*almath.TO_RAD)

targetTf = initTf*deltaTf

path = list(targetTf.toVector())

times = 2.0 # seconds

motionProxy.transformInterpolations(effector, frame, path, axisMask, times)

# Go to rest position

motionProxy.rest()

if __name__ == "__main__":

parser = argparse.ArgumentParser()

parser.add_argument("--ip", type=str, default="127.0.0.1",

help="Robot ip address")

parser.add_argument("--port", type=int, default=9559,

help="Robot port number")

args = parser.parse_args()

main(args.ip, args.port)

Case 3: Multiple Effector Trajectories¶

The goal of this example is to simultaneously control three effectors: the Torso, the Left Arm and the Right Arm.

- Torso motion: non-blocking method;

- Right Arm motion: blocking method during the first half of torso motion;

- Left Arm motion: blocking method during the last half of the torso motion.

almotion_cartesianTorsoArm1.py

# -*- encoding: UTF-8 -*-

'''Cartesian control: Multiple Effector Trajectories'''

''' This example is only compatible with NAO '''

import argparse

import motion

import almath

from naoqi import ALProxy

def main(robotIP, PORT=9559):

''' Simultaneously control three effectors:

the Torso, the Left Arm and the Right Arm

Warning: Needs a PoseInit before executing

'''

motionProxy = ALProxy("ALMotion", robotIP, PORT)

postureProxy = ALProxy("ALRobotPosture", robotIP, PORT)

# Wake up robot

motionProxy.wakeUp()

# Send robot to Stand Init

postureProxy.goToPosture("StandInit", 0.5)

frame = motion.FRAME_WORLD

coef = 0.5 # motion speed

times = [coef, 2.0*coef, 3.0*coef, 4.0*coef]

useSensorValues = False

# Relative movement between current and desired positions

dy = +0.03 # translation axis Y (meters)

dz = -0.03 # translation axis Z (meters)

dwx = +8.0*almath.TO_RAD # rotation axis X (radians)

# Motion of Torso with post process

effector = "Torso"

path = []

initTf = almath.Transform(motionProxy.getTransform(effector, frame, useSensorValues))

# point 1

deltaTf = almath.Transform(0.0, -dy, dz)*almath.Transform().fromRotX(-dwx)

targetTf = initTf*deltaTf

path.append(list(targetTf.toVector()))

# point 2

path.append(list(initTf.toVector()))

# point 3

deltaTf = almath.Transform(0.0, dy, dz)*almath.Transform().fromRotX(dwx)

targetTf = initTf*deltaTf

path.append(list(targetTf.toVector()))

# point 4

path.append(list(initTf.toVector()))

axisMask = almath.AXIS_MASK_ALL # control all the effector axes

motionProxy.post.transformInterpolations(effector, frame, path,

axisMask, times)

# Motion of Arms with block process

frame = motion.FRAME_TORSO

axisMask = almath.AXIS_MASK_VEL # control just the position

times = [1.0*coef, 2.0*coef] # seconds

# Motion of Right Arm during the first half of the Torso motion

effector = "RArm"

path = []

currentTf = motionProxy.getTransform(effector, frame, useSensorValues)

targetTf = almath.Transform(currentTf)

targetTf.r2_c4 -= 0.04 # y

path.append(list(targetTf.toVector()))

path.append(currentTf)

motionProxy.transformInterpolations(effector, frame, path, axisMask, times)

# Motion of Left Arm during the last half of the Torso motion

effector = "LArm"

path = []

currentTf = motionProxy.getTransform(effector, frame, useSensorValues)

targetTf = almath.Transform(currentTf)

targetTf.r2_c4 += 0.04 # y

path.append(list(targetTf.toVector()))

path.append(currentTf)

motionProxy.transformInterpolations(effector, frame, path, axisMask, times)

# Go to rest position

motionProxy.rest()

if __name__ == "__main__":

parser = argparse.ArgumentParser()

parser.add_argument("--ip", type=str, default="127.0.0.1",

help="Robot ip address")

parser.add_argument("--port", type=int, default=9559,

help="Robot port number")

args = parser.parse_args()

main(args.ip, args.port)

Case 4: Apply rotation¶

The goal of this example is to apply 15 degrees rotation around axis y to the Left Arm. In this case, it is difficult to compute the desired target as a position6D, from current position6D of LArm and rotation of 15 degrees around the Y axis. We cannot add 15 degrees to the wy component. In this general case, Transform is much simpler to use.

almotion_advancedCreateRotation.py

# -*- encoding: UTF-8 -*-

import argparse

import motion

import almath

from naoqi import ALProxy

def main(robotIP, PORT=9559):

motionProxy = ALProxy("ALMotion", robotIP, PORT)

postureProxy = ALProxy("ALRobotPosture", robotIP, PORT)

# Wake up robot

motionProxy.wakeUp()

# Send NAO to Pose Init

postureProxy.goToPosture("StandInit", 0.5)

# Get transform of Left Arm in Torso frame

chainName = "LArm"

frame = motion.FRAME_TORSO

useSensor = False

tf = almath.Transform(motionProxy.getTransform(chainName, frame, useSensor))

# Compute desired transform: rotation of -20 degrees around the Z axis

tfEnd = almath.Transform.fromRotZ(-20.0*almath.TO_RAD)*tf

tfEnd.r1_c4 = tf.r1_c4

tfEnd.r2_c4 = tf.r2_c4

tfEnd.r3_c4 = tf.r3_c4

# Set the desired target

axisMask = 63 # rotation

fractionMaxSpeed = 0.1

transform = [val for val in tfEnd.toVector()]

motionProxy.setTransforms(chainName, frame, transform, fractionMaxSpeed, axisMask)

# Go to rest position

motionProxy.rest()

if __name__ == "__main__":

parser = argparse.ArgumentParser()

parser.add_argument("--ip", type=str, default="127.0.0.1",

help="Robot ip address")

parser.add_argument("--port", type=int, default=9559,

help="Robot port number")

args = parser.parse_args()

main(args.ip, args.port)