Face Characteristics - Get Expression

Cannot be tested on a simulated robot.


When you run this behavior, the robot tracks your face and tells you which facial expression it detected!

In this behavior, the robot can identify the facial expression of only one person at a time.

| Step | Action |

|---|---|

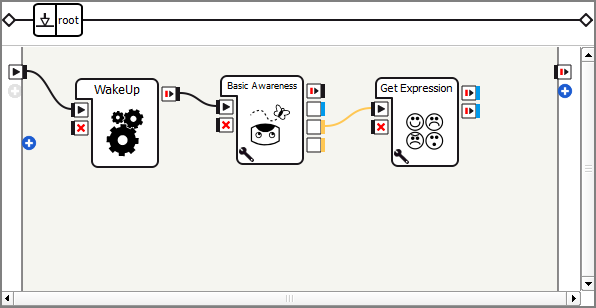

| Drag and drop the Movement > Motors > WakeUp box onto the Flow Diagram panel. | |

| Connect its input to the diagram input. | |

| Test | The robot stands up. |

| Drag and drop the Sensing > Vision > Surroundings > Basic Awareness box onto the Flow Diagram panel. | |

| Connect its input to the output of the WakeUp box. | |

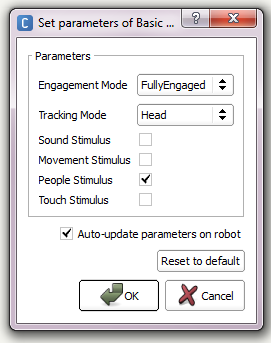

Click on its  parameter button. parameter button. |

|

Deselect all stimuli but the People Stimulus.

|

|

| Test | The robot only tracks human faces, but no sounds, movements etc. |
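
If you script the robot directly instead of using the box parameters, the stimulus selection above can be sketched in Python. This is a minimal sketch, assuming NAOqi 2.5's `ALBasicAwareness.setStimulusDetectionEnabled(stimulus, enabled)` and the stimulus names in `ALL_STIMULI`; the stimuli actually available depend on the robot model and NAOqi version. `keep_only_people_stimulus` and `FakeAwareness` are hypothetical helpers, and the fake object stands in for a real proxy so the example runs without a robot.

```python
# Stimulus names assumed from the NAOqi 2.5 ALBasicAwareness documentation;
# the exact list may differ between robot models and NAOqi versions.
ALL_STIMULI = ["People", "Touch", "TabletTouch", "Sound", "Movement", "NavigationMotion"]


def keep_only_people_stimulus(awareness):
    """Disable every stimulus except "People" (hypothetical helper).

    `awareness` must provide setStimulusDetectionEnabled(name, enabled),
    for example an ALBasicAwareness proxy on a real robot (assumption).
    """
    for stimulus in ALL_STIMULI:
        awareness.setStimulusDetectionEnabled(stimulus, stimulus == "People")


class FakeAwareness(object):
    """Records calls instead of talking to a robot, so the sketch runs anywhere."""

    def __init__(self):
        self.enabled = {}

    def setStimulusDetectionEnabled(self, name, enabled):
        self.enabled[name] = enabled


fake = FakeAwareness()
keep_only_people_stimulus(fake)
print(sorted(name for name, on in fake.enabled.items() if on))  # ['People']
```

Passing the proxy in as an argument keeps the logic testable without a robot; on real hardware you would hand it the actual ALBasicAwareness proxy instead of the fake.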

| Step | Expected result |
|---|---|
| Drag and drop the Sensing > Human Understanding > Get Expression box onto the Flow Diagram panel. | |
| Connect its input to the Human Tracked output of the Basic Awareness box. | |
| Test | The robot tries to identify the facial expression of the person in front of it. |
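
The Get Expression box reports a single expression label. As a rough illustration only, and not necessarily how the box is implemented, a label could be picked from per-expression scores as shown below. The sketch assumes the scores arrive in the order `[neutral, happy, surprised, angry, sad]`, as in the `ExpressionProperties` values that `ALFaceCharacteristics` writes to memory; `dominant_expression` and its `min_confidence` threshold are hypothetical.

```python
# Assumed order of the five scores in ALFaceCharacteristics' ExpressionProperties.
EXPRESSIONS = ["neutral", "happy", "surprised", "angry", "sad"]


def dominant_expression(properties, min_confidence=0.5):
    """Return the highest-scoring expression, or None if it is below the threshold.

    `min_confidence` is a hypothetical cut-off chosen for illustration.
    """
    if len(properties) != len(EXPRESSIONS):
        raise ValueError("expected %d scores, got %d" % (len(EXPRESSIONS), len(properties)))
    # Index of the largest score.
    best = max(range(len(properties)), key=lambda i: properties[i])
    if properties[best] < min_confidence:
        return None
    return EXPRESSIONS[best]


print(dominant_expression([0.1, 0.7, 0.1, 0.05, 0.05]))  # happy
print(dominant_expression([0.3, 0.2, 0.2, 0.2, 0.1]))  # None
```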

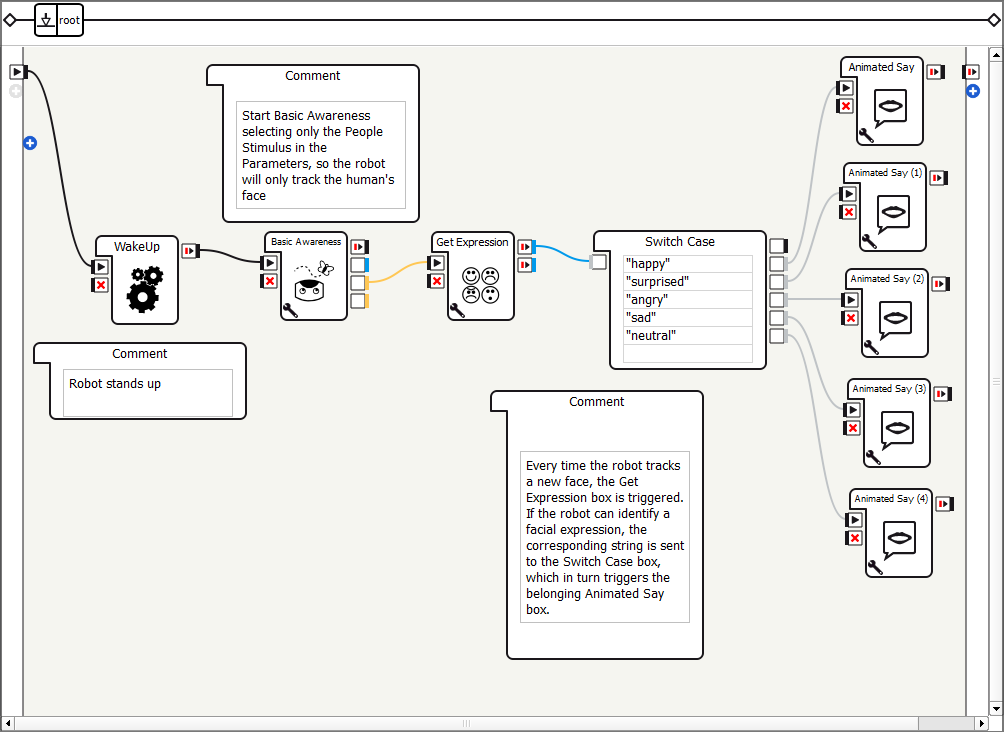

| Drag and drop the Programming > Logic > Switch Case box onto the Flow Diagram panel. | |

| Connect its input to the onStopped output of the Get Expression box. | |

| Remove all existing cases from the Switch Case box and add the cases “happy”, “surprised”, “angry”, “sad” and “neutral”. | |

| Test | When the robot detected a facial expression, the Switch Case box triggers the corresponding output. |
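
Conceptually, the Switch Case box compares its input with each case and fires the matching output. The hypothetical `switch_case` function below mimics that with a simple lookup, returning the index of the matching output in the case order entered above. It returns `None` when nothing matches; the real box may route unmatched values differently.

```python
# Case order as entered in the Switch Case box above.
CASES = ["happy", "surprised", "angry", "sad", "neutral"]


def switch_case(value, cases=CASES):
    """Return the index of the output whose case equals `value`, or None."""
    for index, case in enumerate(cases):
        if case == value:
            return index
    return None


print(switch_case("angry"))  # 2
print(switch_case("disgusted"))  # None
```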

| Step | Expected result |
|---|---|
| Drag and drop five Speech > Creation > Animated Say boxes onto the Flow Diagram panel. | |
| Connect each of them to one of the grey outputs of the Switch Case box. | |
| Double-click one of the Animated Say boxes and edit the text in its Localized Text box as desired. Repeat this for the other four Animated Say boxes. | |
| Test | The robot says the text from the Localized Text box inside whichever Animated Say box the Switch Case box triggered. |
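
The chain from detected expression to spoken reply boils down to a lookup followed by a speech call. In this sketch, the `RESPONSES` texts are placeholders for whatever you typed into each Localized Text box, `respond_to_expression` is a hypothetical helper, and `say` is any callable that takes a string. On a real robot it could be the `say` method of an `ALAnimatedSpeech` proxy; that is an assumption, not necessarily what the Animated Say box calls internally.

```python
# Placeholder texts; in Choregraphe they live in each Animated Say box's Localized Text box.
RESPONSES = {
    "happy": "You look happy!",
    "surprised": "You look surprised!",
    "angry": "You look angry.",
    "sad": "You look sad.",
    "neutral": "Your face looks neutral.",
}


def respond_to_expression(expression, say):
    """Speak the text for `expression` via `say`; return True if something was said."""
    text = RESPONSES.get(expression)
    if text is None:
        # No Animated Say box is connected for this value.
        return False
    say(text)
    return True


spoken = []
respond_to_expression("surprised", spoken.append)
respond_to_expression("unknown", spoken.append)
print(spoken)  # ['You look surprised!']
```

Using a plain list's `append` as `say` makes it easy to check which sentence would have been spoken without a robot.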