Sample 4: a first solitary application

Here is a typical, well-designed, simple solitary application.

Content outline: when you stay in Engagement Zone 2 for at least 10 seconds, Pepper greets you. The application can be launched again only once 60 seconds have passed since it last ran.

Guided Tour

Let’s discover how it works.

The main behavior of this application has two Launch Trigger Conditions and contains two Timeline boxes: Human Detected Animation and Hello Animation.

Launch Trigger Conditions

Aim

Ensure that Pepper:

- greets the person who has been detected in Engagement Zone 2 for at least 10 seconds,

- greets somebody again only once at least 60 seconds have passed since the previous greeting.

How it works

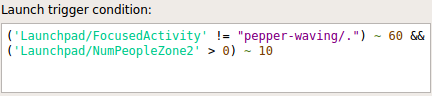

Click the Properties button in the Project Files panel to open the Project Properties window. Then click the root behavior to see its Launch Trigger Conditions.

The two conditions are combined with the logical && (AND) operator, so both must be fulfilled at the same time; otherwise the application cannot be launched.

Let’s read and understand the two conditions:

- the first condition checks that the focused activity has not been Pepper Waving at any time during the last 60 seconds (~ 60), and

- the second condition checks that there has been at least one person in Engagement Zone 2 (> 0) throughout the last 10 seconds.
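To make the duration semantics of `~` concrete, the two conditions can be modelled in plain Python. This is a minimal sketch, not the robot's condition evaluator: it assumes that `(expression) ~ N` means "the expression has been true continuously for the last N seconds", and the `Sample` history, the functions `held_for` and `can_launch`, and the activity name `"pepper_waving"` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    """One hypothetical snapshot of the robot's state; it holds until the next one."""
    t: float               # timestamp, in seconds
    focused_activity: str  # name of the focused activity ("" if none)
    people_in_zone2: int   # number of people detected in Engagement Zone 2


def held_for(history: List[Sample], now: float, duration: float,
             predicate: Callable[[Sample], bool]) -> bool:
    """True if predicate held over the whole window [now - duration, now].

    Assumes history is sorted by time and that each sample's state lasts
    until the next sample (piecewise-constant state).
    """
    start = now - duration
    before = [s for s in history if s.t <= start]
    if not before:
        return False  # the history does not cover the whole window
    # The state at the start of the window, plus every change inside it.
    relevant = [before[-1]] + [s for s in history if start < s.t <= now]
    return all(predicate(s) for s in relevant)


def can_launch(history: List[Sample], now: float) -> bool:
    # Condition 1 (~ 60): focused activity is not "pepper_waving" for 60 s.
    not_waving = held_for(history, now, 60,
                          lambda s: s.focused_activity != "pepper_waving")
    # Condition 2 (~ 10): at least one person in Engagement Zone 2 for 10 s.
    someone_in_zone2 = held_for(history, now, 10,
                                lambda s: s.people_in_zone2 > 0)
    # The trigger joins both with &&, so both must hold at the same time.
    return not_waving and someone_in_zone2


history = [
    Sample(0, "", 0),                 # nobody around, nothing focused
    Sample(100, "", 1),               # a person enters Engagement Zone 2
    Sample(112, "pepper_waving", 1),  # the application becomes focused
    Sample(125, "", 1),               # it finishes; the person stays
]

for now in (105, 111, 150, 190):
    print(now, can_launch(history, now))
```

With this made-up history, the sketch reports False at t = 105 (the person has been in the zone for only 5 seconds), True at t = 111, False at t = 150 (the application was still focused 25 seconds earlier) and True again at t = 190.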

Human Detected Animation

Aim

This Timeline box launches an animation with a matching sound, making the robot show that it has detected a person.

How it works

This is the first box to be triggered, because it is directly linked to the onStart input of the application.

Hello Animation

Aim

The Hello Timeline box combines:

- body movements,

- a sound from a sound file,

- an image displayed on Pepper’s tablet.

How it works

This box is triggered when the Human Detected Animation box ends successfully, because its onStart input is linked to the onStopped output of the Human Detected Animation box.
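The chaining of the two boxes can be pictured as a small signal graph. The Python sketch below only models that wiring and is not Choregraphe's API: the `Box` class, its `on_start` method and its `on_stopped` list are hypothetical stand-ins for a box's onStart input and onStopped output, and each box is assumed to end successfully right away instead of playing a real animation.

```python
from typing import Callable, List


class Box:
    """Hypothetical stand-in for a Choregraphe box with one input and one output."""

    def __init__(self, name: str, log: List[str]):
        self.name = name
        self.log = log
        # Inputs of other boxes linked to this box's onStopped output.
        self.on_stopped: List[Callable[[], None]] = []

    def on_start(self) -> None:
        # A real Timeline box would play its animation here; we only record it.
        self.log.append(f"{self.name} started")
        self.log.append(f"{self.name} stopped")
        # Ending successfully fires onStopped, which triggers every linked input.
        for target in self.on_stopped:
            target()


log: List[str] = []
human_detected = Box("Human Detected Animation", log)
hello = Box("Hello Animation", log)

# Link: onStopped of Human Detected Animation -> onStart of Hello Animation.
human_detected.on_stopped.append(hello.on_start)

# The application's onStart input is linked directly to the first box.
app_on_start = human_detected.on_start
app_on_start()
print(log)
```

Because Hello Animation is only reachable through the onStopped link, it can never start before Human Detected Animation has finished.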

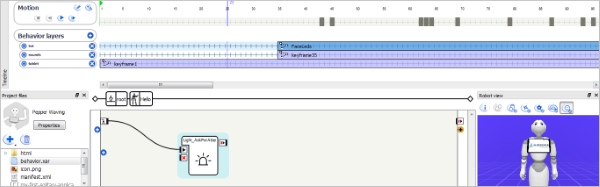

Double-click the Hello Timeline box to open it.

Let’s look at its four main layers:

- Motion: the motion keyframes of the animation, each of which represents the position of the robot's body parts at a given time.

- led behavior layer: a Light_AskForAttentionEyes box, which turns the robot's LEDs blue.

- music behavior layer: a Play Soundset box, which plays a random sound chosen among the selected sounds of the Aldebaran SoundSet.

- tablet behavior layer: a Show Image box, which displays a GIF image on the tablet.
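Between two motion keyframes, the robot's posture has to be computed by interpolation. The sketch below illustrates the idea under loudly stated assumptions: it uses plain linear interpolation for simplicity (the real timeline may use a different, smoother interpolation), it assumes a frame rate of 25 frames per second, and the keyframe values are illustrative angles, in radians, for two of Pepper's right-arm joints.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple

# Hypothetical keyframes: (frame number, {joint name: angle in radians}).
Keyframe = Tuple[int, Dict[str, float]]

KEYFRAMES: List[Keyframe] = [
    (0, {"RShoulderPitch": 1.5, "RElbowRoll": 0.3}),
    (25, {"RShoulderPitch": -1.0, "RElbowRoll": 1.0}),
    (50, {"RShoulderPitch": 1.5, "RElbowRoll": 0.3}),
]


def pose_at(frame: float, keyframes: List[Keyframe]) -> Dict[str, float]:
    """Linearly interpolate every joint between the surrounding keyframes."""
    frames = [f for f, _ in keyframes]
    # Before the first or after the last keyframe, hold the nearest pose.
    if frame <= frames[0]:
        return dict(keyframes[0][1])
    if frame >= frames[-1]:
        return dict(keyframes[-1][1])
    i = bisect_right(frames, frame)  # frames[i - 1] <= frame < frames[i]
    (f0, p0), (f1, p1) = keyframes[i - 1], keyframes[i]
    alpha = (frame - f0) / (f1 - f0)
    return {joint: p0[joint] + alpha * (p1[joint] - p0[joint]) for joint in p0}


FPS = 25  # assumed timeline frame rate
for seconds in (0.0, 0.5, 1.0):
    print(seconds, pose_at(seconds * FPS, KEYFRAMES))
```

Storing only a few keyframes and computing the poses in between keeps the Motion layer compact, while the led, music and tablet layers run their boxes alongside it on the same timeline.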

Try it!

Try the application.

| Step | Action |
|---|---|
| 1 | In the Robot applications panel, click the Package and install the current project on the robot button. |
| 2 | Make sure autonomous life is on. If not, click the Turn autonomous life on button. |
| 3 | Try to launch the application by entering Engagement Zone 2 and staying there for at least 10 seconds. |

You can also try the behavior alone by clicking the Play button.

Note that this example only works correctly on a real Pepper, since ALTabletService is not present on a virtual robot.

Make it yours!

Edit whichever part of the content you want to customize, for example the launch trigger conditions.

Want to package it?

| Step | Action |
|---|---|
| 1 | Customize its properties. You can keep most of the properties as they are, but the following ones must be adapted: |
| 2 | Package it. |
